S3 Event Imports

In addition to the Measurement API and Web Measurement SDK, Gamesight also supports ingesting Events through S3 Event Imports. With this system, you can deliver Event data to Gamesight by regularly writing files to your S3-compatible object store. In some cases this is an easier path to integration if you already have a central telemetry datastore that can be used to export Events in bulk.

S3 Event Imports support S3-compatible object stores from multiple cloud providers. You can find a specific guide for your cloud provider below:

Specification

The S3 Event Import system is designed to accommodate different file formats, delivery frequencies, and more, so that it can work with your existing data infrastructure. The requirements are as follows:

- Delivery Frequency - You can deliver files at whatever frequency you prefer (daily, hourly, every few minutes, etc). By default, we will scan for new files every 5 minutes and process any new files for import.

- Immutable Objects - Each file you write to object storage must be immutable. Once we scan an individual file we will not reprocess it if it is updated. If you need to amend data in a file please write a new file and use the transaction_id field for deduplication if necessary.

- Partitioning Structure - You are free to use whatever partition or prefix structure that you would like for the files you deliver. The one requirement is that the prefix must contain a date component such that we can limit our bucket scan to specific files by date using the prefix. For example, all of the following are valid partition structures:

      # Daily partitioning
      events/dt=YYYY-MM-DD/some-file.csv
      myprefix/YYYYMMDD/HHMM/events-0001.csv
      # Timestamp in file name
      export-YYYY-MM-DDTHH:MM:SS.csv
      YYYYMMDD-HHMMSS.csv

  - If you are unable to include a time component in your prefix, we can accept a file delivery without time in the prefix if you include a lifecycle rule to automatically delete old files after 72 hours. The S3 Event Import system has a limit of 1,440 files it will process per partition or prefix, so the lifecycle rule is necessary to avoid hitting this limit.

- File Format - We support the following file formats:

  - json - Newline separated json objects. Each line contains the equivalent payload as supported by the /events API.
  - csv - CSV file with a header on the first row. The supported format is outlined in File Formats.
  - avro - Each record contains the equivalent payload as supported by the /events API.

- Required Fields - Each event is required to include a user_id, type, and timestamp. In addition, it is highly recommended that you include a unique ID for each event in the transaction_id field to ensure event ingest is idempotent.
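As a rough illustration of the requirements above, the following sketch builds a date-partitioned object key and serializes events as newline-separated JSON. The function names, the `events` prefix default, and the file naming scheme are assumptions made for this example, not part of the Gamesight specification; uploading the result is left to whatever S3 client you already use.

```python
import json
from datetime import datetime, timezone

REQUIRED_FIELDS = {"user_id", "type", "timestamp"}


def partition_key(ts: datetime, prefix: str = "events") -> str:
    """Build an object key with a date partition and a timestamped file name.

    The naming scheme is hypothetical; any prefix containing a date works.
    """
    return f"{prefix}/dt={ts:%Y-%m-%d}/events-{ts:%Y%m%d-%H%M%S}.json"


def to_ndjson(events: list[dict]) -> str:
    """Serialize events as newline-separated JSON, one object per line."""
    lines = []
    for event in events:
        missing = REQUIRED_FIELDS - event.keys()
        if missing:
            raise ValueError(f"event is missing required fields: {sorted(missing)}")
        lines.append(json.dumps(event))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    body = to_ndjson([
        {
            "user_id": "user123",
            "type": "game_launch",
            "timestamp": "2020-01-01T00:00:00",
            # A stable ID from your own datastore keeps re-deliveries idempotent.
            "transaction_id": "b214210d-034b-421a-9b78-63a93c7c448c",
        }
    ])
    print(partition_key(now))
    print(body, end="")
```

Because objects must be immutable, each export in this sketch gets a new timestamped key rather than overwriting an earlier file.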

Options

In addition to the requirements outlined above, there are a number of optional settings which can be configured to customize your ingest if needed.

- Scan Frequency - By default your bucket is scanned every 5 minutes for new files.

- Scan Depth - By default, partitions for the last 72 hours are scanned for new files. This means data with a partition or prefix for a time before 72 hours ago will not be automatically ingested.

Please let us know if you would like to configure any of these options on your data delivery and we'd be happy to confirm.
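If you backfill or deliver late data, it can help to check locally whether a partition still falls inside the default 72-hour scan depth. The sketch below is a conservative check that treats a partition as scannable only if its start time lies within the last 72 hours; exactly how Gamesight compares partitions against the window is not specified here, so treat the comparison as an assumption.

```python
from datetime import datetime, timedelta, timezone

# Default scan depth from the text above; configurable on request.
SCAN_DEPTH = timedelta(hours=72)


def within_scan_depth(partition_start: datetime, now: datetime,
                      depth: timedelta = SCAN_DEPTH) -> bool:
    """Return True if the partition start lies within the last `depth` of time."""
    return now - depth <= partition_start <= now


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    yesterday = now - timedelta(days=1)
    last_week = now - timedelta(days=7)
    print(within_scan_depth(yesterday, now))
    print(within_scan_depth(last_week, now))
```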

Limits

There are a few limits in place to protect from misconfigurations. Please keep these in mind as you are designing your data format.

| Limit | Description | Default | Configurable |
|---|---|---|---|
| Files Per Day | Maximum number of files per daily partition that Gamesight will process | 1,440 | Yes |
| Maximum File Size | Largest file size that Gamesight will process for import | 100 MiB | No |
| Maximum Errors per File | Limit to the number of Events that can contain errors before the file is skipped | 1,000 | No |
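One way to stay within these limits is to split an export into several files before upload. The sketch below groups newline-separated records into chunks of at most 100 MiB and checks a file count against the default daily limit (1,440 is one file per minute over 24 hours). The helper names are hypothetical, and the splitter assumes line-oriented formats such as json; a csv export would also need the header repeated in each file.

```python
MAX_FILE_BYTES = 100 * 1024 * 1024  # 100 MiB limit from the table above
MAX_FILES_PER_DAY = 1440            # default limit; one file per minute


def split_into_files(lines, max_bytes=MAX_FILE_BYTES):
    """Group text lines into byte chunks that each stay within max_bytes."""
    chunks, current, size = [], [], 0
    for line in lines:
        encoded = (line + "\n").encode("utf-8")
        if len(encoded) > max_bytes:
            raise ValueError("a single line exceeds the maximum file size")
        # Start a new chunk when adding this line would exceed the limit.
        if current and size + len(encoded) > max_bytes:
            chunks.append(b"".join(current))
            current, size = [], 0
        current.append(encoded)
        size += len(encoded)
    if current:
        chunks.append(b"".join(current))
    return chunks


def fits_daily_limit(file_count, max_files=MAX_FILES_PER_DAY):
    """Return True if a daily partition's file count is within the limit."""
    return file_count <= max_files


if __name__ == "__main__":
    files = split_into_files(['{"type": "game_launch"}'] * 3)
    print(len(files), fits_daily_limit(len(files)))
```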

AWS Getting Started

To get started with S3 Event Import you'll need to set up an S3 bucket in your AWS account and provide Gamesight with access.

Create the Bucket

Feel free to use your favorite IaC tool (CloudFormation, Terraform, etc.), but if you want to do things in the AWS console, here is a quick overview of how to get that set up.

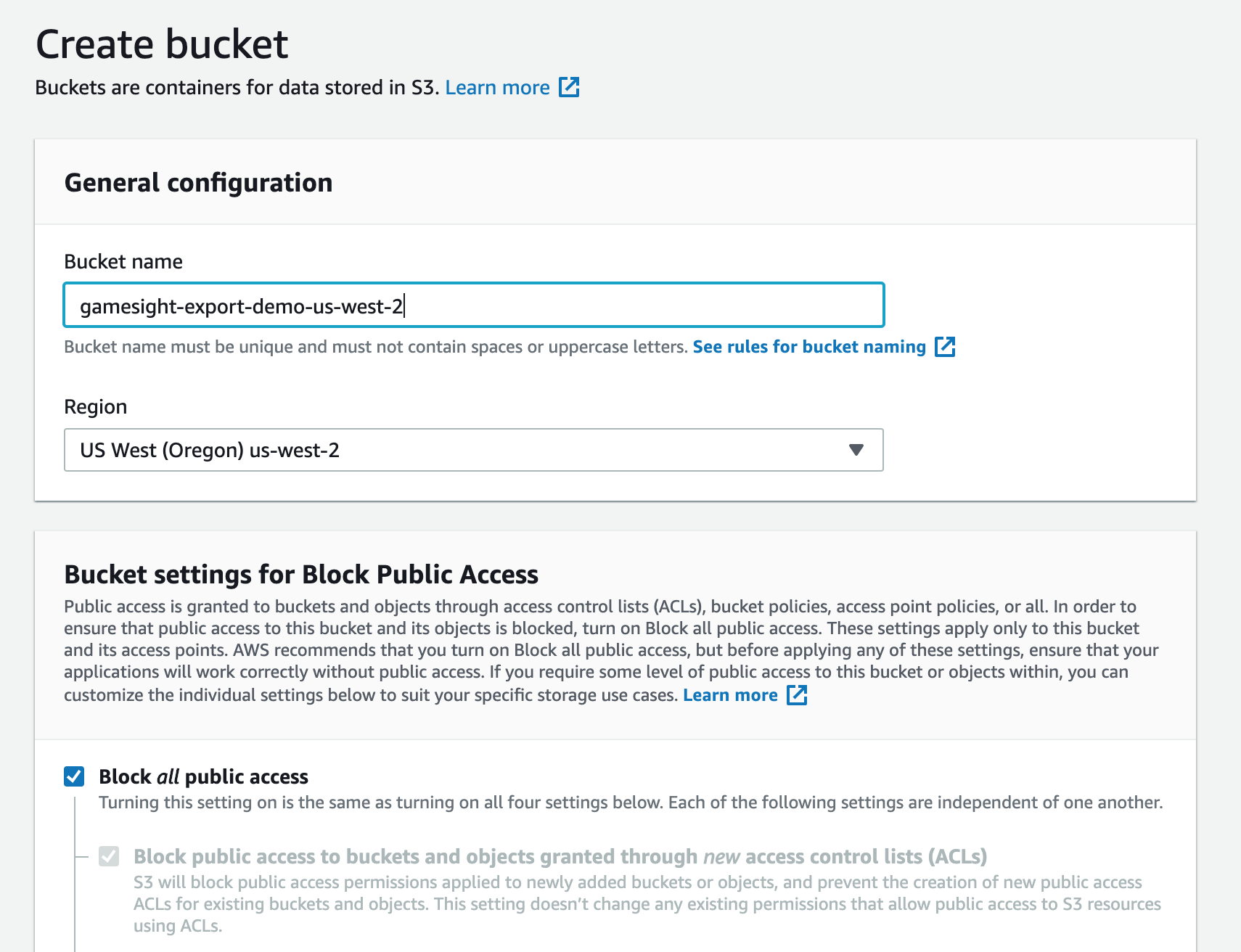

First, click "Create Bucket" on the AWS S3 Console and fill out the form. Take note of the Bucket name and Region as we will need those values later.

Also, please leave the "Block all public access" option checked to prevent accidental data exposure.

Create IAM Role

The next step is to create an IAM role with read access into the bucket. Gamesight will use this role when running imports.

Head over to the IAM Console -> Roles and click "Create Role".

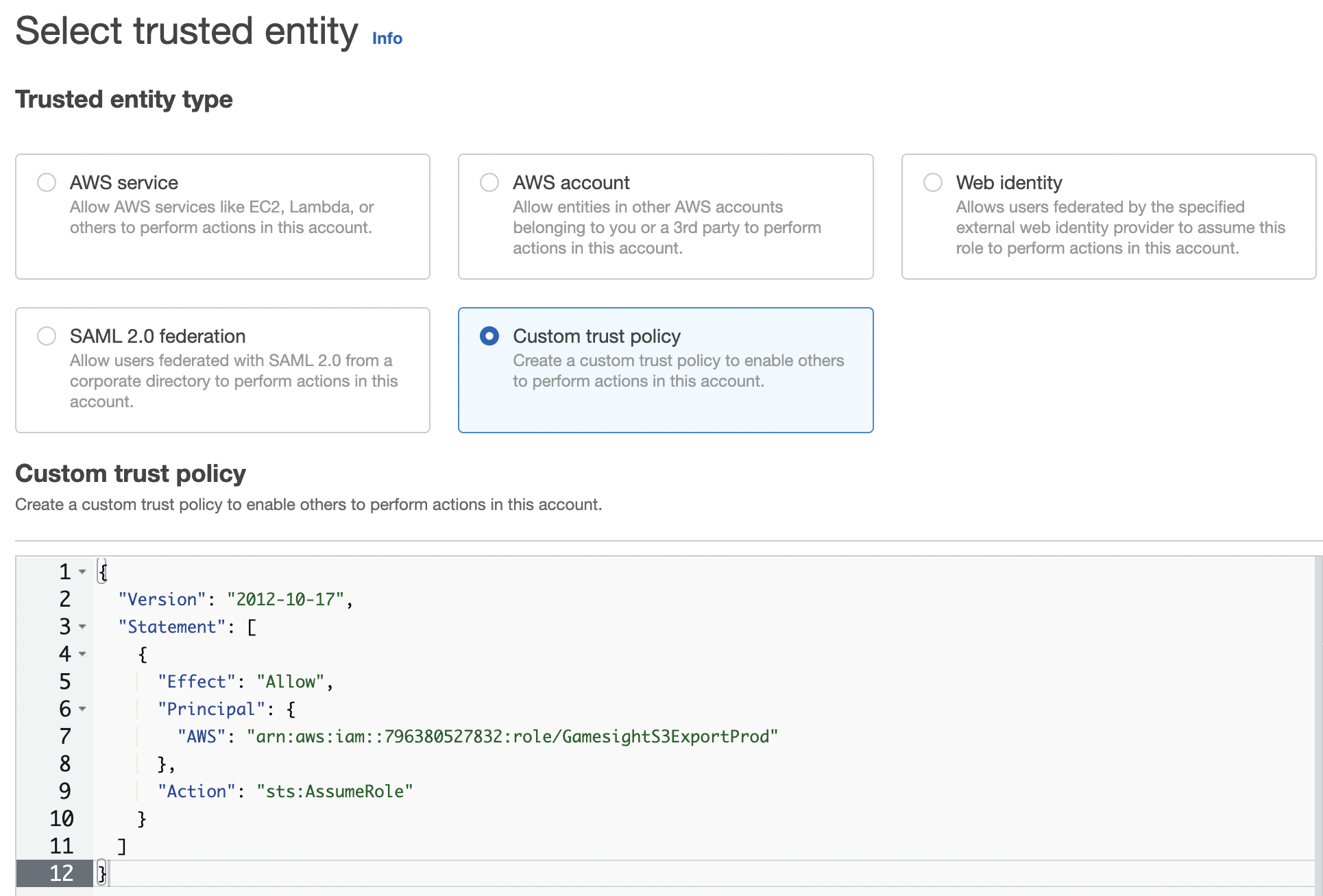

Select "Custom trust policy" as the Trusted entity type and paste the following trust policy.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {
            "AWS": "arn:aws:iam::796380527832:role/GamesightS3ImportProd"
          },
          "Action": "sts:AssumeRole"
        }
      ]
    }

Note: Dedicated Deployment Customers

If your Game is running in a Dedicated environment, please contact us before setting the Trust Policy on your import Role. The permissions for your import will be slightly different than the ones documented above.

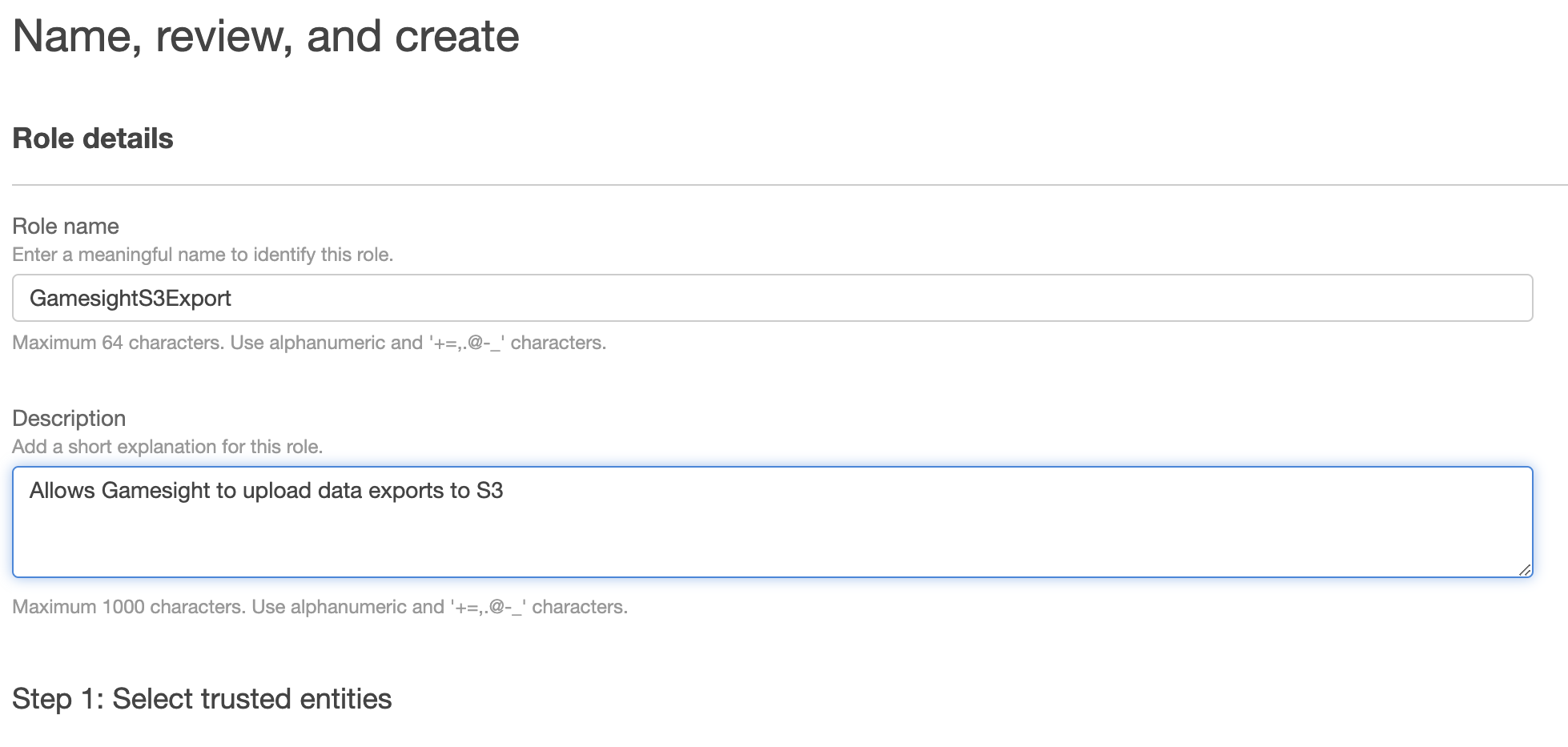

Continue through the Role creation flow, skipping the Policy attachment step and filling in whatever tags you need for your org.

You should reach this screen where you can provide a nice descriptive name and description for your role. Click "Create Role" and navigate to your new role.

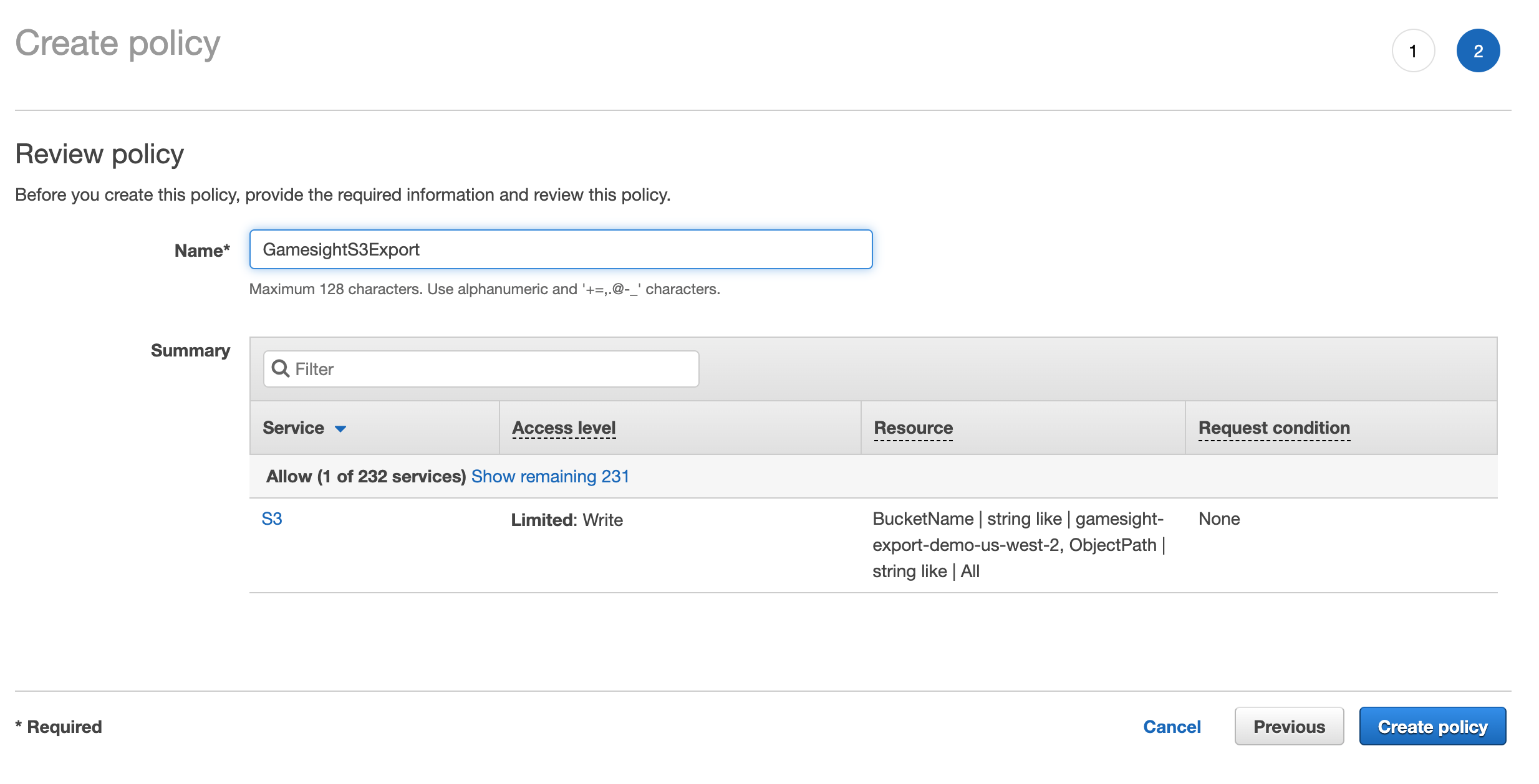

Attach the Policy

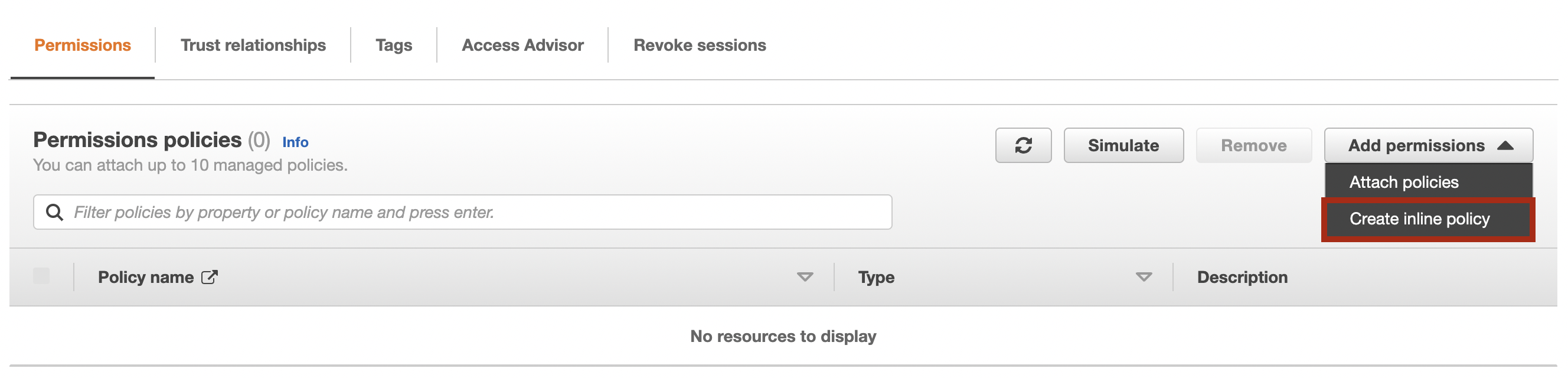

Now we need to attach a policy to our new role enabling it to read from the S3 bucket we created. Head over to your role and click "Add inline policy".

When prompted, paste the following policy into the JSON tab:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "s3:GetObject",
            "s3:ListBucket"
          ],
          "Resource": [
            "arn:aws:s3:::YOUR-BUCKET-NAME-HERE/*",
            "arn:aws:s3:::YOUR-BUCKET-NAME-HERE"
          ]
        }
      ]
    }

Be sure to replace YOUR-BUCKET-NAME-HERE with the actual name of your bucket. Give the policy a name and save it to attach it to your role.
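If you prefer to generate the policy rather than edit the placeholder by hand, a small helper along these lines can fill in the bucket name. This is only a convenience sketch; the function name is hypothetical, and the policy it emits mirrors the read-only policy shown above.

```python
import json


def build_read_policy(bucket_name: str) -> str:
    """Return the inline read-only policy JSON for the given bucket."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:ListBucket"],
                "Resource": [
                    f"arn:aws:s3:::{bucket_name}/*",  # objects in the bucket
                    f"arn:aws:s3:::{bucket_name}",    # the bucket itself (for listing)
                ],
            }
        ],
    }
    return json.dumps(policy, indent=2)


if __name__ == "__main__":
    # Example bucket name taken from the Finish Up section of this guide.
    print(build_read_policy("gamesight-import-demo-us-west-2"))
```

The output can be pasted into the JSON tab or passed to your IaC tool of choice.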

That's it! The last step is to just send some details over to us so we can enable the import for your account.

Finish Up

Once you have completed the above steps, please contact us through live chat or email us at [email protected] and we can finish off the process required to get your reporting live. Please include the following pieces of information with your request: bucket name, bucket region, role ARN, partition structure, and file format.

Here is an example of each of those values:

- Bucket name: gamesight-import-demo-us-west-2

- Bucket region: us-west-2

- Role ARN: arn:aws:iam::123456789012:role/GamesightS3Import

- Partition Structure: events/dt=YYYY-MM-DD

- File Format: csv

GCP Getting Started

To get started with S3 Event Import in Google Cloud Storage you'll need to set up a GCS bucket in your GCP account and provide Gamesight with access.

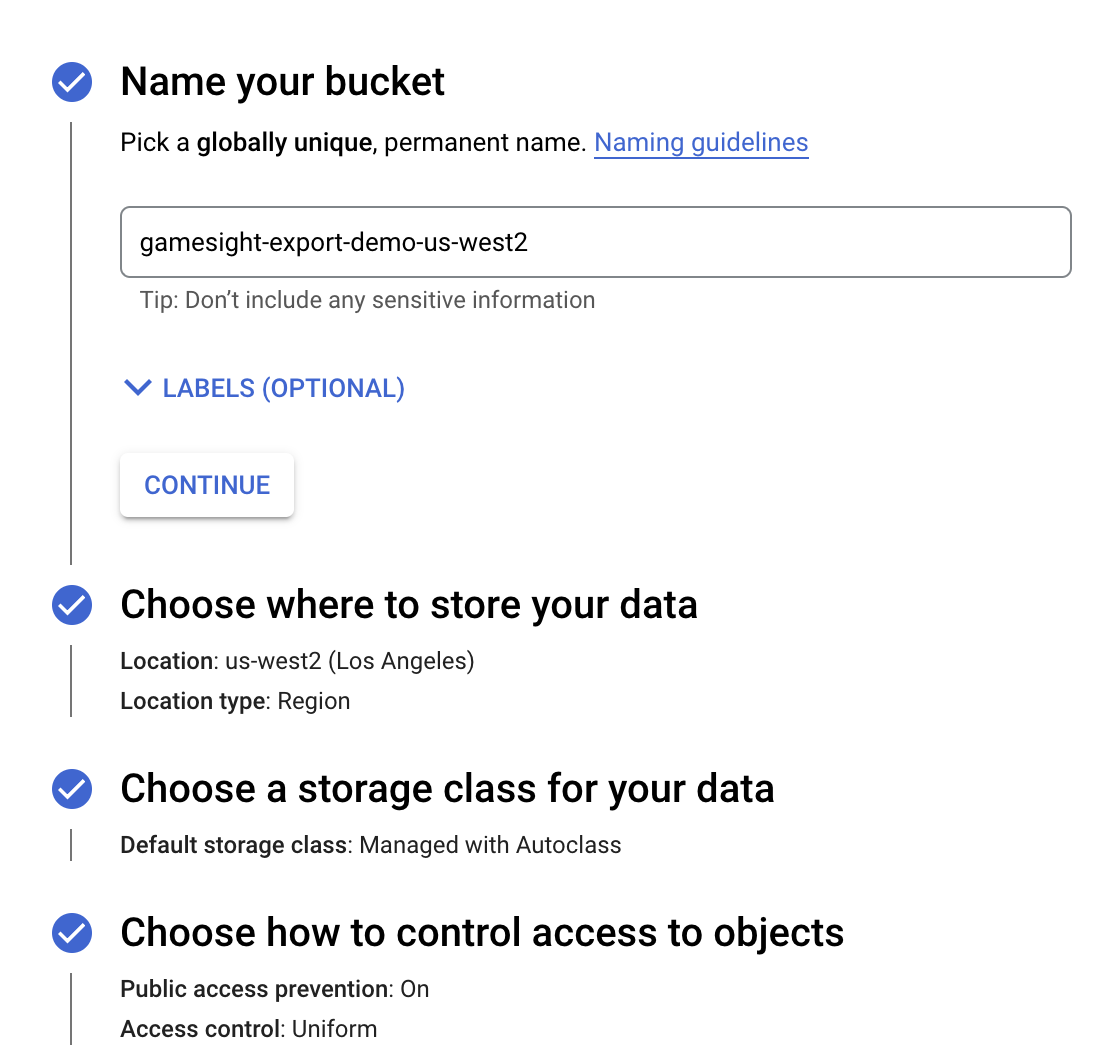

Create the Bucket

Feel free to use your favorite IaC tool (Terraform, etc.), but if you want to do things in the GCP console, here is a quick overview of how to get that set up.

First, click the "Create" button on the Cloud Storage Console and fill out the form. Take note of the Bucket name as we will need this value later.

Also, please leave the "Enforce public access prevention on this bucket" option checked to prevent accidental data exposure.

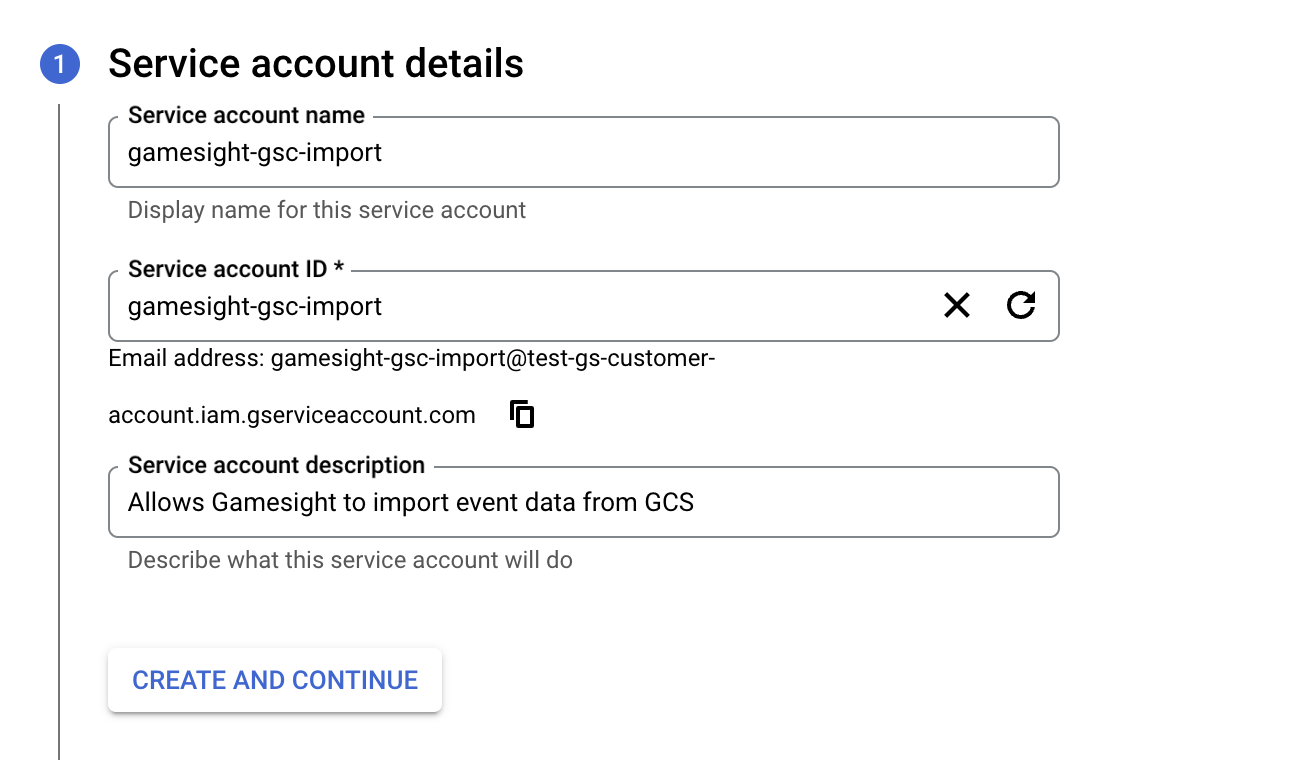

Create Service Account

Next, you will create a Service Account that Gamesight can use to write objects into your new bucket. Detailed instructions for this process can be found in Google's documentation.

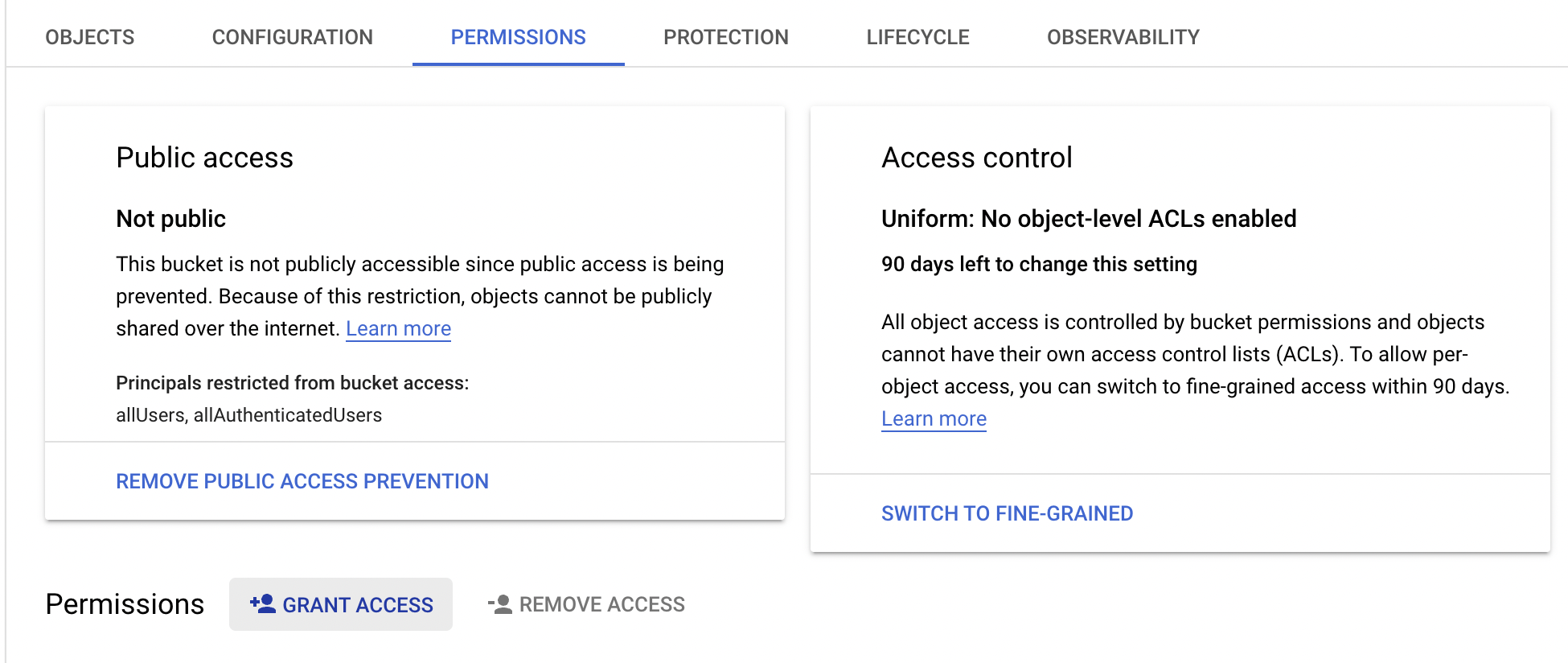

Assign Permissions

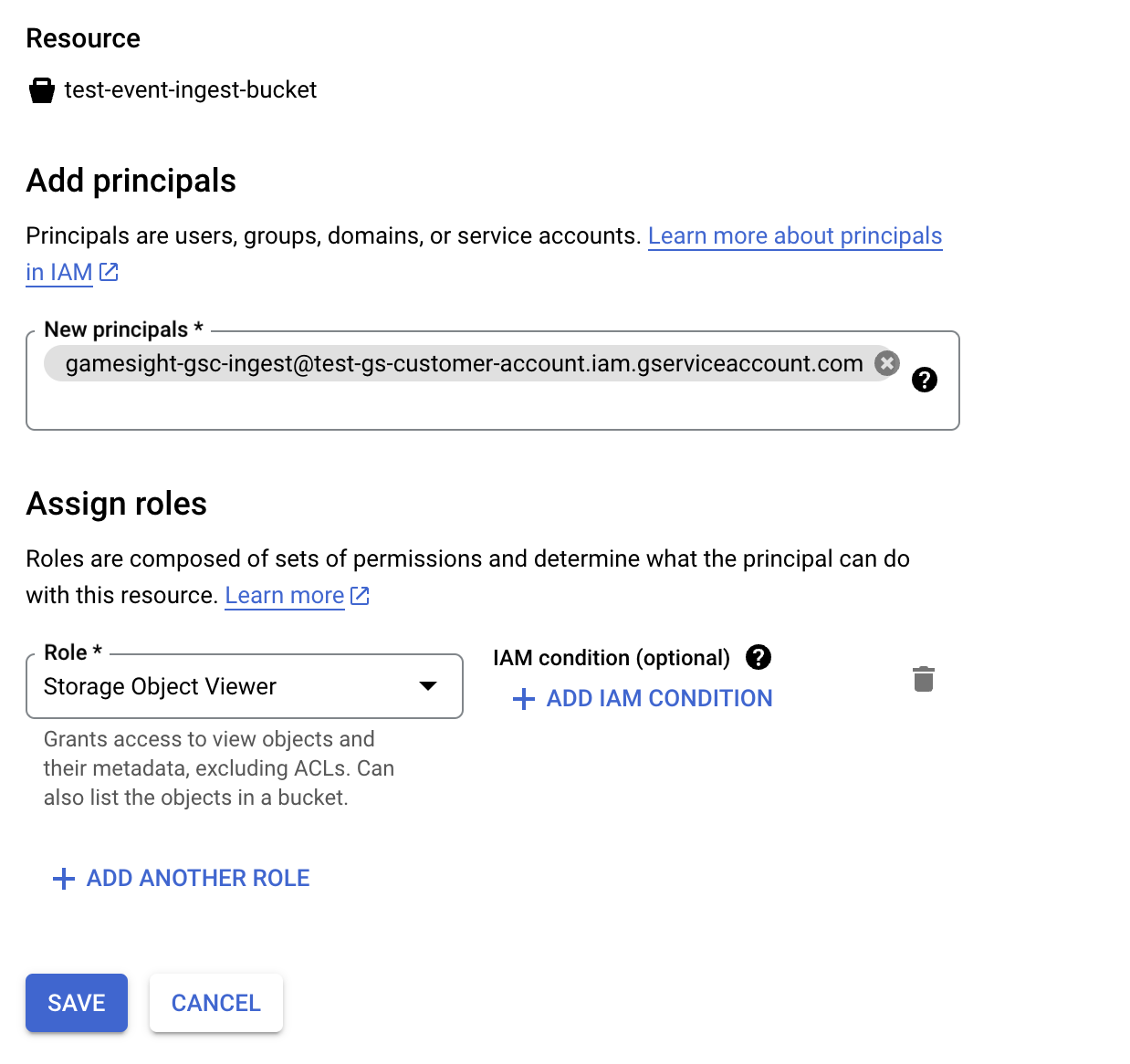

Once your service account is created, we will want to assign IAM permissions that allow it to write data into your bucket.

On the Permissions tab for your bucket, press the "Grant Access" button to begin assigning a role to your new service account.

Type in the full name for the service account you created in the previous step and grant it the "Storage Object Admin" role which permits this service account to write and overwrite objects in this bucket.

Generate HMAC Key

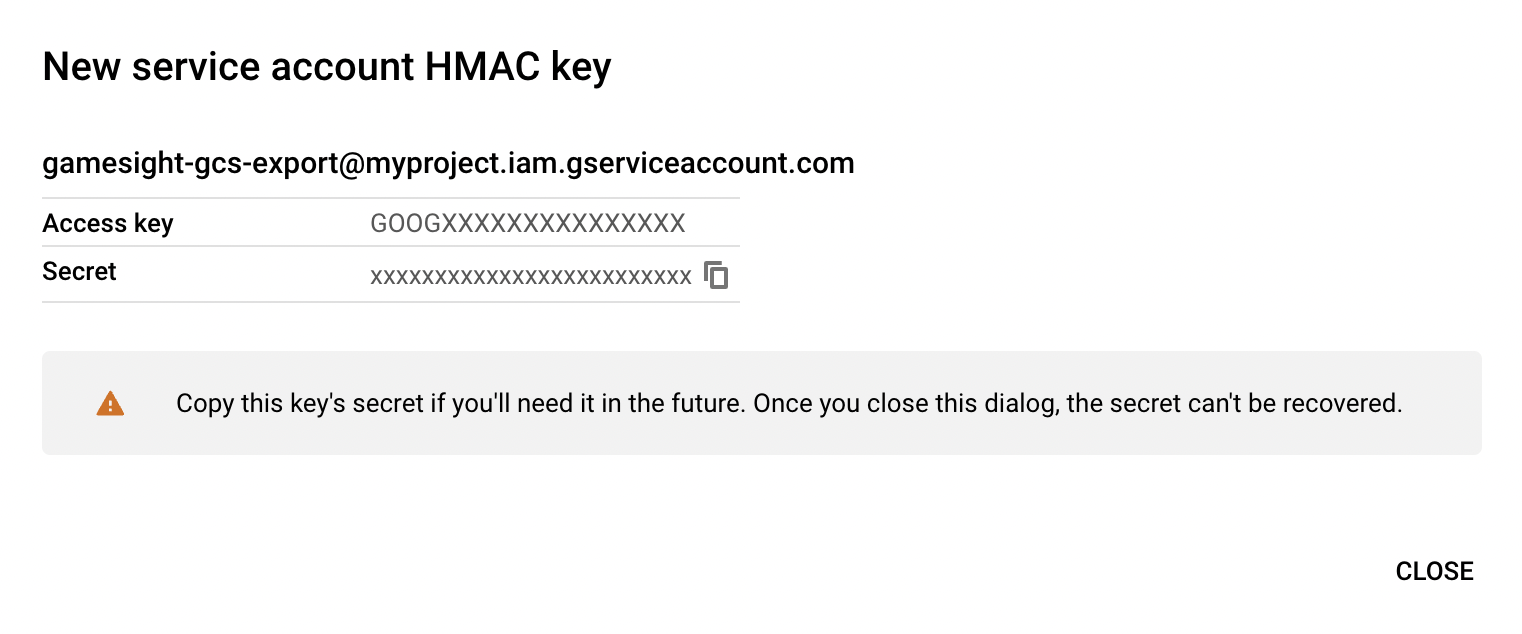

The final step is to generate HMAC keys for our service account so Gamesight can assume this role when writing data to your bucket.

Head over to the Cloud Storage Console > Settings > Interoperability and press the "Create a key for a service account" button. Select your service account and press new key to generate a new key.

Copy down both the Access key and Secret.

Finish Up

Once you have completed the above steps, please contact your account rep and we can finish off the process required to get your reporting live.

You will need to include the following pieces of information with your request: bucket name, access key, secret, partition structure, and file format. Due to the sensitive nature of these credentials, we ask that you use a secure transfer mechanism such as a one-time link to share these details (for example, 1Password or onetimesecret).

Here is an example of each of those values:

- Bucket name: gamesight-import-demo-us-west2

- Access key: GOOGXXXXX

- Secret: XXXXXXXXX

- Partition Structure: events/dt=YYYY-MM-DD

- File Format: csv

File Formats

json, avro, csv, and tsv file formats are accepted. You can read more about required event fields and their usage in the /events endpoint documentation.

The following fields are supported for csv and tsv file imports:

| Field | Required | Example |
|---|---|---|
| type | Yes | game_launch |
| user_id | Yes | user123 |
| timestamp | Yes | 2020-01-01T00:00:00 |
| transaction_id | Yes | b214210d-034b-421a-9b78-63a93c7c448c |
| identifiers.ip | Recommended | 123.123.123.123 |
| identifiers.os | No | Windows 10 |
| identifiers.resolution | No | 1920x1080 |
| identifiers.language | No | en |
| identifiers.timezone | No | -480 |
| identifiers.sku | No | console_bundle1 |
| identifiers.platform | No | steam |
| identifiers.platform_device_id | No | 5dea5330-7d05-426b-a0bd-dbe3459b7d52 |
| identifiers.gsid | No | 2c5a5a68-e434-4509-86e0-267323b0aac7 |
| identifiers.useragent | No | Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36 |
| identifiers.country | No | DE |
| revenue_currency | No | USD |
| revenue_amount | No | 1.00 |
| gameplay_session_id | No | e14a898d-e69d-4b61-ada6-5c70c198ccba |
| metadata | No | {..} - arbitrary json object |
| external_ids | No | [..] - json serialized external ID data |
| consent_scopes.processing | No | 1/0 or true/false |
| consent_scopes.attribution | No | 1/0 or true/false |
| consent_scopes.postbacks | No | 1/0 or true/false |
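To show how the dotted column names map onto a csv file, here is a minimal sketch using Python's standard csv module. The column subset, the `events_to_csv` helper, and the sample metadata value are assumptions for illustration; columns left out of a row are written as empty cells, and a metadata dict is serialized to a JSON string.

```python
import csv
import io
import json

# A subset of the supported columns; extend with other fields from the table.
FIELDNAMES = [
    "type", "user_id", "timestamp", "transaction_id",
    "identifiers.ip", "identifiers.platform",
    "revenue_currency", "revenue_amount", "metadata",
]


def events_to_csv(rows):
    """Render events as CSV text with a header on the first row."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDNAMES, lineterminator="\n")
    writer.writeheader()
    for row in rows:
        row = dict(row)  # copy so the caller's dict is not modified
        if isinstance(row.get("metadata"), dict):
            row["metadata"] = json.dumps(row["metadata"])
        writer.writerow(row)
    return buf.getvalue()


if __name__ == "__main__":
    print(events_to_csv([
        {
            "type": "game_launch",
            "user_id": "user123",
            "timestamp": "2020-01-01T00:00:00",
            "transaction_id": "b214210d-034b-421a-9b78-63a93c7c448c",
            "identifiers.platform": "steam",
            "metadata": {"level": 3},  # hypothetical metadata payload
        }
    ]), end="")
```

For tsv output, the same writer can be created with `delimiter="\t"`.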
